The Checkpoint - Issue #14

What's new

Stable Diffusion beta signup now open – Run, don't walk.

More custom-trained Disco Diffusion models from @KaliYuga_ai – Watercolor Diffusion, Lithography Diffusion, Medieval Diffusion and Textile Diffusion.

Ultra-high-definition image demoiréing (UHDM) – A new model that removes the moiré pattern you get when photographing a screen. Project page, GitHub, paper.

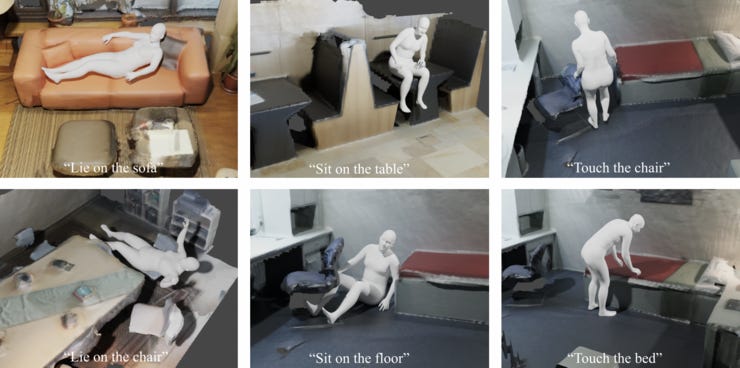

Compositional human-scene interaction synthesis with semantic control (COINS) – Given a 3D scene and a text instruction, COINS can generate realistic human interactions with that scene. Project page, GitHub.

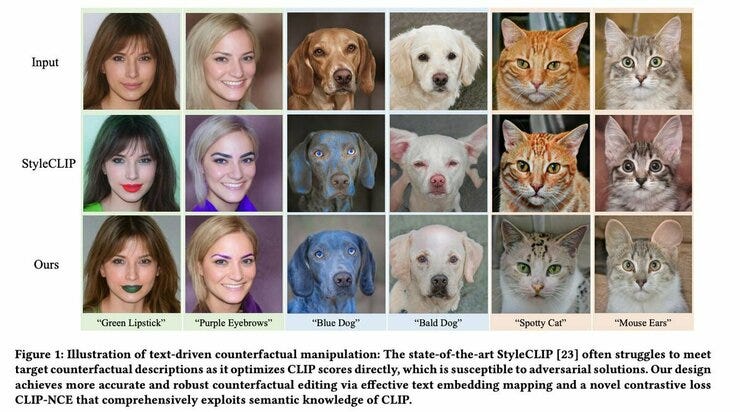

Counterfactual Image Manipulation via CLIP (CF-CLIP) – A new method for accurately editing StyleGAN-generated images with CLIP. GitHub, paper.

Featured notebook – CogVideo

The code for the most powerful open-source text-to-video model is now available. It requires a serious GPU, such as an A100, but you can also try it out on Hugging Face Spaces.

Get it:

My attempt was... less successful:

Thanks for reading!

If you have anything you’d like to be featured or want to get in touch, give me a shout on Twitter or via email. Please also consider supporting me on Patreon so that I can spend more time creating content like this.